MBI Videos

TGDA@OSU TRIPODS Center Workshop: Theory and Foundations of TGDA

-

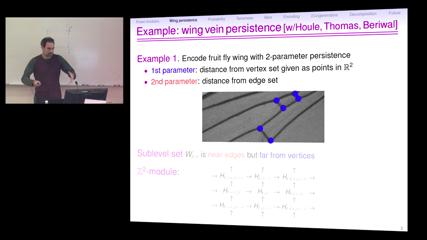

Edgar Lobaton

Topological Data Analysis has been applied to a number of problems in order to study the geometric and topological structure of point cloud data. In this talk, we discuss the application of these tools to the analysis of time series, from cyborg-insect to human motion. These tools allow us to identify different types of motion behaviors from the agents, and help us discover the geometric structure of their surroundings. The mathematical framework developed allows us to derive bounds on the sampling of the data space in order to ensure recovery of the correct topological information, and also provides guarantees on the robustness of these quantities to perturbations of the data.

-

David Mount

Recently, a series of seminal results has established the central role that convex approximation plays in solving a number of computational problems in Euclidean spaces of constant dimension. These include fundamental applications such as computing the diameter of a point set, Euclidean minimum spanning trees, bichromatic closest pairs, width kernels, and nearest neighbor searching. In each case, an approximation parameter eps > 0 is given, and the problem is to compute a solution that is within a factor of 1+eps of the exact solution.

In this talk, I will present recent approaches to convex approximation. These approaches achieve efficiency by being locally sensitive to the shape and curvature of the body. This is achieved through a combination of methods, both new and classical, including Delone sets in the Hilbert metric, Macbeath regions, and John ellipsoids. We will explore the development of these methods and discuss how they can be applied to the above problems.
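To make the 1+eps guarantee concrete, here is a minimal sketch for one of the listed problems, the diameter of a planar point set. It uses classical direction sampling, not the Macbeath-region or Hilbert-metric methods of the talk, and the function name `approx_diameter` is hypothetical. The extent of the point set along k evenly spaced directions underestimates the diameter by a factor of at most cos(pi/(2k)), so k is chosen to make that factor at least 1/(1+eps).

```python
import math

def approx_diameter(points, eps):
    """Return D with D <= diameter <= (1 + eps) * D for 2D points (eps > 0).

    Illustrative direction-sampling sketch; not the method from the talk.
    """
    # Some sampled direction lies within pi/(2k) of the true diameter
    # direction, so the best extent is >= diameter * cos(pi / (2k)).
    k = max(2, math.ceil(math.pi / (2 * math.acos(1 / (1 + eps)))))
    best = 0.0
    for i in range(k):
        theta = i * math.pi / k
        ux, uy = math.cos(theta), math.sin(theta)
        # Extent of the point set projected onto direction (ux, uy).
        proj = [x * ux + y * uy for x, y in points]
        best = max(best, max(proj) - min(proj))
    return best
```

The loop costs O(n k) time with k on the order of 1/sqrt(eps) directions, which is the kind of dependence on eps that locally sensitive convex approximation aims to improve in higher dimensions.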

-

Ezra Miller

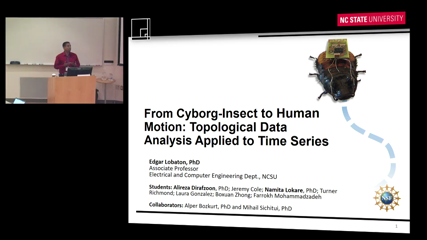

Persistent homology with multiple continuous parameters presents fundamental challenges different from those arising with one real or multiple discrete parameters: no existing algebraic theory applies (even poorly or inadequately). In part that is because the relevant modules are wildly infinitely generated. This talk explains how and why real multiparameter persistence should nonetheless be practical for data science applications. The key is a finiteness condition that encodes topological tameness -- which occurs in all modules arising from data -- robustly, in equivalent combinatorial and homological algebraic ways. Out of the tameness condition surprisingly falls much of ordinary (that is, noetherian) commutative algebra, crucially including finite minimal primary decomposition and a concept of minimal generator. The geometry and relevance of these algebraic notions will be explained from scratch, assuming no prior experience with commutative algebra, in the context of two genuine motivating applications: summarizing probability distributions and topology of fruit fly wing veins.

-

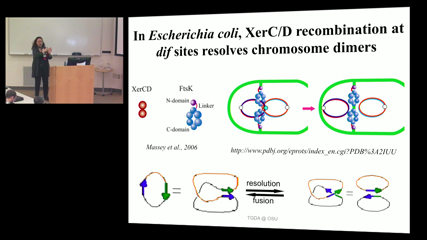

Mariel Vazquez

In Escherichia coli, DNA replication yields two interlinked circular chromosomes. Returning the chromosomes to an unlinked monomeric state is essential to cell survival. Simplification of DNA topology is mediated by enzymes, such as recombinases and topoisomerases. Recombinases act by a local reconnection event. We investigate analytically minimal pathways of unlinking by local reconnection. We introduce a Monte Carlo method to simulate local reconnection, provide a quantitative measure to distinguish among pathways and identify a most probable pathway. These results point to a universal property relevant to any local reconnection event between two sites along one or two circles.

-

Paul Bendich

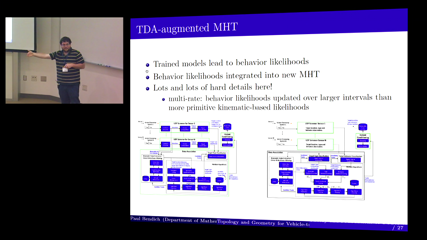

Many systems employ sensors to interpret the environment.

The target-tracking task is to gather sensor data from the environment and then to partition these data into tracks that are produced by the same target. A key challenge is to "connect-the-dots": more precisely, to take a sensor observation at a given time and associate it with a previously-existing track (or to declare that this is a new object). This can be very challenging, especially when there are multiple targets present, and when different sensors "go blind" at various times. There are a number of high-level designs for multi-target multi-sensor tracking systems, but many contemporary ones fit within the multiple hypothesis (MHT) paradigm. Typical MHTs formulate the 'connect-the-dots' problem as one of Bayesian inference, with competing multi-track hypotheses receiving scores.

This talk surveys three recent efforts to integrate topological and geometric information into the formula for computing hypothesis scores. The first uses zero-dimensional persistent homology summaries of kinematic information in car tracking, and the second uses persistent homology summaries in state space to form grouping hypotheses for nautical traffic. Finally, a method using self-similarity matrices is employed to make useful cross-modal comparisons in heterogeneous sensor networks. In all three efforts, large improvements in MHT performance are observed.

This talk is based on work funded by OSD, NRO, AFRL, and NSF, and it was done with many collaborators, including Nathan Borggren, Sang Chin, Jesse Clarke, Jonathan deSena, John Harer, Jay Hineman, Elizabeth Munch, Andrew Newman, Alex Pieloch, David Porter, David Rouse, Nate Strawn, Christopher J. Tralie, Adam Watkins, Michael Williams, and Peter Zulch.
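As a rough illustration of the zero-dimensional persistent homology summaries mentioned above, the sketch below computes the scales at which connected components of a point cloud merge, using union-find over edges sorted by length. It assumes a Vietoris-Rips-style filtration in which an edge appears at scale equal to its length (some conventions use half the length), and the function name `h0_death_scales` is hypothetical; it is not the tracking pipeline from the talk.

```python
import math
from itertools import combinations

def h0_death_scales(points):
    """Scales at which connected components merge (H0 death times).

    Every component is born at scale 0; one component never dies.
    Assumes an edge enters the filtration at scale equal to its length.
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )
    deaths = []
    for length, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            # This edge joins two components, so one of them dies here.
            parent[ri] = rj
            deaths.append(length)
    return deaths
```

For n points the result has n - 1 entries, which coincide with the edge lengths of a Euclidean minimum spanning tree; a few large entries indicate well-separated groups.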

-

Carola Wenk

Persistent homology is commonly applied to study the shape of high-dimensional data sets. In this talk we consider two low-dimensional data scenarios in which both geometry and topology are of importance. We study 2D and 3D images of prostate cancer tissue, in which glands form cycles or voids. Treatment decisions for prostate cancer patients are largely based on the pathological analysis of the geometry and topology of these data. As a second scenario we consider the comparison of road networks.

-

Laxmi Parida

Many problems in genomics are combinatorial in nature, i.e., the interrelationships amongst the entities are on par with, if not more important than, the values of the entities themselves.

Graphs are the most commonly used mathematical object to model such relationships. However, often it is important to capture not just pairwise, but more general $k$-wise relationships. TDA provides a natural basis to model such interactions and, additionally, it provides mechanisms for extracting signal patterns from noisy data.

I will discuss two applications of this model, one to population genomics and the other to meta-genomics. In the former, we model the problem of detecting admixture in populations and in the latter we deal with the problem of detecting false positives in microbiome data.

The unifying theme is the use of topological methods -- in particular, persistent homology. I will explain the underlying mathematical models and describe the results obtained in both cases.

-

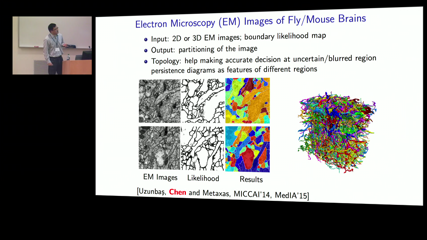

Chao Chen

Computing the optimal cycle of a given homology class is a challenging problem. Existing algorithms, although polynomial, are far from practical. We propose a new algorithm based on the A-star search. A carefully designed heuristic function is proposed to guide the search for the optimal solution. Our method is the first to be practical in large-scale image analysis tasks. We apply the method to cardiac image analysis, in which we use persistent homology to detect delicate muscle structures and compute their optimal representations. We believe the method can be applied to many other topological data analysis contexts, where we want to annotate the persistence diagram with additional geometric information.

-

Samory Kpotufe

Estimating the mode or modal-sets (i.e. extrema points or surfaces) of an unknown density from a sample is a basic problem in data analysis. Such estimation is relevant to other problems such as clustering and outlier detection, or can simply serve to identify low-dimensional structures in high-dimensional data (e.g. point-cloud data from medical imaging, astronomy, etc). Theoretical work on mode-estimation has largely concentrated on understanding its statistical difficulty, while less attention has been given to implementable procedures. Thus, theoretical estimators, which are often statistically optimal, are for the most part hard to implement. Furthermore, for more general modal-sets (general extrema of any dimension and shape) much less is known, although various existing procedures (e.g. for manifold-denoising or density-ridge estimation) have similar practical aims. I’ll present two related contributions of independent interest: (1) practical estimators of modal-sets – based on particular subgraphs of a k-NN graph – which attain minimax-optimal rates under surprisingly general distributional conditions; (2) high-probability finite sample rates for k-NN density estimation, which is at the heart of our analysis. Finally, I’ll discuss recent successful work towards the deployment of these modal-sets estimators for various clustering applications.

Much of the talk is based on a series of works with collaborators S. Dasgupta, K. Chaudhuri, U. von Luxburg, and Heinrich Jiang.
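For readers unfamiliar with k-NN density estimation, the following is a minimal one-dimensional sketch of the standard estimate f_k(x) = k / (n · vol(B(x, r_k(x)))), where r_k(x) is the distance from x to its k-th nearest sample point, together with a naive plug-in mode estimate. The function names are hypothetical, a sample point counts as its own nearest neighbor (so k >= 2 is assumed when querying at sample points), and this is not the subgraph-based modal-set procedure from the talk.

```python
def knn_radius(sample, x, k):
    """Distance from x to its k-th nearest point in the 1D sample."""
    return sorted(abs(x - p) for p in sample)[k - 1]

def knn_density(sample, x, k):
    """k-NN density estimate at x for a 1D sample.

    The interval [x - r, x + r] has length 2r, so the estimate is
    k / (n * 2r). Assumes r > 0 (k >= 2 and no exact duplicates).
    """
    r = knn_radius(sample, x, k)
    return k / (len(sample) * 2.0 * r)

def plug_in_mode(sample, k):
    """Sample point with the highest k-NN density estimate."""
    return max(sample, key=lambda p: knn_density(sample, p, k))
```

For example, with the sample `[0.0, 0.9, 1.0, 1.1, 5.0]` and `k = 3`, the point `1.0` has the smallest 3-NN radius and is returned as the mode estimate.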

-

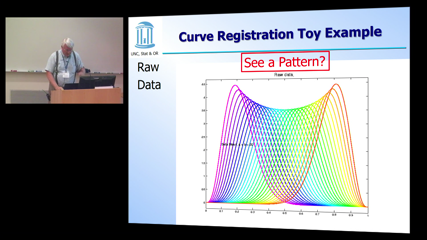

J. S. Marron

Object Oriented Data Analysis is the statistical analysis of populations of complex objects. This is seen to be particularly relevant in the Big Data era, where it is argued that an even larger scale challenge is Complex Data. Data objects with a geometric structure constitute a particularly active current research area. This is illustrated using a number of examples where data objects naturally lie in manifolds and spaces with a manifold stratification. An overview of the area is given, with careful attention to vectors of angles, i.e. data objects that naturally lie on torus spaces. Principal Nested Submanifolds, which are generalizations of flags, are proposed to provide new analogs of Principal Component Analysis for data visualization. Open problems as to how to weight components in a simultaneous fitting procedure are discussed.

-

Peter Bubenik

Persistence modules are a central algebraic object arising in topological data analysis. Interleaving provides a natural way to measure distances between persistence modules. We consider various classes of persistence modules and the relationships between them. We also study the resulting topological spaces and their basic topological properties.
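For reference, the usual definition of the interleaving distance for one-parameter persistence modules can be written as follows. The notation is an assumption, since the abstract fixes none: $M(\varepsilon)$ denotes the shifted module with $M(\varepsilon)_t = M_{t+\varepsilon}$, and $\varphi^M_{2\varepsilon}\colon M \to M(2\varepsilon)$ is the morphism induced by the structure maps of $M$.

$$
M \text{ and } N \text{ are } \varepsilon\text{-interleaved if there exist morphisms } f\colon M \to N(\varepsilon),\ g\colon N \to M(\varepsilon) \text{ with } g(\varepsilon)\circ f = \varphi^M_{2\varepsilon},\quad f(\varepsilon)\circ g = \varphi^N_{2\varepsilon},
$$

$$
d_I(M, N) = \inf\{\varepsilon \ge 0 : M \text{ and } N \text{ are } \varepsilon\text{-interleaved}\}, \qquad \inf\emptyset = \infty .
$$

A 0-interleaving is an isomorphism, but $d_I$ can vanish between non-isomorphic modules, so it is in general an extended pseudometric.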

-

Ulrich Bauer

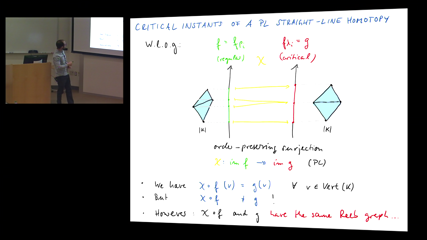

We consider the setting of Reeb graphs of piecewise linear functions and study distances between them that are stable, meaning that functions which are similar in the supremum norm ought to have similar Reeb graphs. We define an edit distance for Reeb graphs and prove that it is stable and universal, meaning that it provides an upper bound to any other stable distance. In contrast, via a specific construction, we show that the interleaving distance and the functional distortion distance on Reeb graphs are not universal.

(Joint work with Claudia Landi and Facundo Mémoli)