-

Qingyuan Zhao

In this talk I will introduce a marginal sensitivity model for inverse probability weighting (IPW) estimators, which is a natural extension of Rosenbaum’s sensitivity model for matched observational studies. The goal is to construct confidence intervals based on IPW estimators such that the intervals have asymptotically nominal coverage of the estimand whenever the data generating distribution is in the collection of marginal sensitivity models. I will use a percentile bootstrap and a generalized minimax/maximin inequality to transform this intractable problem into a linear fractional programming problem, which can be solved very efficiently. I will illustrate our method using a real dataset to estimate the causal effect of fish consumption on blood mercury level.
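
As a rough illustration of the linear fractional programming step, the sketch below computes the range of a stabilized IPW estimate of a mean outcome among treated (or observed) units. It assumes, as one common formulation that the abstract does not spell out, that the sensitivity model lets the odds of treatment deviate from the fitted propensity score by a factor between 1/Λ and Λ, so each weight is 1 + z_i (1 − ê_i)/ê_i with z_i in [1/Λ, Λ]. The function name and its arguments are hypothetical, and the percentile bootstrap that would turn these bounds into a confidence interval is omitted.

```python
def ipw_sensitivity_bounds(y, e_hat, lam):
    """Range of a stabilized IPW mean under an assumed odds-ratio bound lam.

    y     : outcomes of the treated (observed) units
    e_hat : fitted propensity scores of those units, each strictly in (0, 1)
    lam   : sensitivity parameter, lam >= 1 (lam == 1 means no hidden bias)
    """
    if lam < 1:
        raise ValueError("lam must be at least 1")
    # (1 - e) / e equals exp(-logit(e)), the part of the weight that is perturbed.
    base = [(1.0 - e) / e for e in e_hat]

    def ratio(pairs, k):
        # The first k units in `pairs` get z = lam, the rest get z = 1 / lam.
        num = den = 0.0
        for i, (yi, bi) in enumerate(pairs):
            z = lam if i < k else 1.0 / lam
            w = 1.0 + z * bi
            num += w * yi
            den += w
        return num / den

    # Upper bound: up-weight the largest outcomes. Lower bound: the smallest.
    desc = sorted(zip(y, base), key=lambda p: p[0], reverse=True)
    asc = desc[::-1]
    n = len(desc)
    # Scanning all n + 1 cut points costs O(n^2) here; fine for a sketch.
    upper = max(ratio(desc, k) for k in range(n + 1))
    lower = min(ratio(asc, k) for k in range(n + 1))
    return lower, upper
```

With `lam = 1` both bounds collapse to the usual stabilized IPW estimate. Because the objective is a ratio of linear functions over a box, its optimum assigns the larger z to one tail of the sorted outcomes, which is why scanning the cut points is enough in this simplified setting.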

-

Sameer Deshpande

There has been increasing concern in both the scientific community and the wider public about the short- and long-term consequences of playing American-style tackle football in adolescence. In this talk, I will discuss several matched observational studies that attempt to uncover this relationship using data from large longitudinal studies. Particular emphasis will be placed on discussing our general study design and analysis plan. I will also discuss limitations of our approach and outline how we might address these from a Bayesian perspective.

-

Georgia Papadogeorgou

Propensity score matching is a common tool for adjusting for observed confounding in observational studies, but is known to have limitations in the presence of unmeasured confounding. In many settings, researchers are confronted with spatially-indexed data where the relative locations of the observational units may serve as a useful proxy for unmeasured confounding that varies according to a spatial pattern. We develop a new method, termed distance adjusted propensity score matching (DAPSm), that incorporates information on units’ spatial proximity into a propensity score matching procedure. DAPSm provides a framework for augmenting a “standard” propensity score analysis with information on spatial proximity and provides a transparent and principled way to assess the relative trade-offs of prioritizing observed confounding adjustment versus spatial proximity adjustment. The method is motivated by and applied to a comparative effectiveness investigation of power plant emission reduction technologies designed to reduce population exposure to ambient ozone pollution.

-

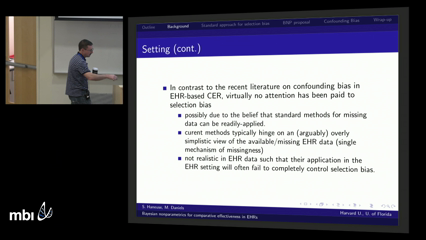

Mike Daniels

N/A

-

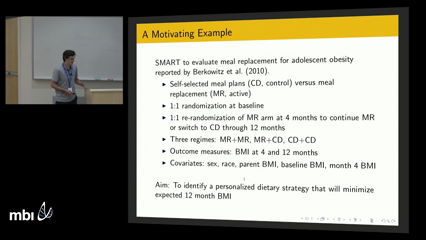

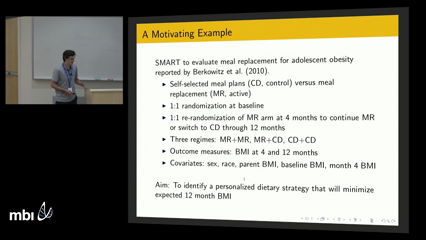

Thomas Murray

Medical therapy often consists of multiple stages, with a treatment chosen by the physician at each stage based on the patient's history of treatments and clinical outcomes. These decisions can be formalized as a dynamic treatment regime. This talk describes a new approach for optimizing dynamic treatment regimes that bridges the gap between Bayesian inference and Q-learning. The proposed approach fits a series of Bayesian regression models, one for each stage, in reverse sequential order. Each model uses as a response variable the remaining payoff assuming optimal actions are taken at subsequent stages, and as covariates the current history and relevant actions at that stage. The key difficulty is that the optimal decision rules at subsequent stages are unknown, and even if these optimal decision rules were known the payoff under the subsequent optimal action(s) may be counterfactual. However, posterior distributions can be derived from the previously fitted regression models for the optimal decision rules and the counterfactual payoffs under a particular set of rules. The proposed approach uses imputation to average over these posterior distributions when fitting each regression model. An efficient sampling algorithm, called the backwards induction Gibbs (BIG) sampler, for estimation is presented, along with simulation study results that compare implementations of the proposed approach with Q-learning.
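
For orientation, the sketch below shows the backward-induction step of ordinary Q-learning, the comparator named in the abstract, in a two-stage setting. It is not the BIG sampler: the Bayesian regressions and the imputation over posterior distributions are replaced by plain cell means. The data layout, the function names, and the assumption that the stage-2 history is summarized by a single discrete state are all hypothetical simplifications.

```python
from collections import defaultdict


def fit_means(rows):
    """Saturated 'regression': the mean response within each (state, action) cell."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for key, value in rows:
        sums[key] += value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


def two_stage_q_learning(data, actions=(0, 1)):
    """data: list of (s1, a1, s2, a2, payoff) tuples, one per patient."""

    def best(q, state):
        # Best action among those actually observed in this state.
        observed = [a for a in actions if (state, a) in q]
        return max(observed, key=lambda a: q[(state, a)])

    # Stage 2 is fitted first (reverse sequential order): Q2(s2, a2) = E[payoff].
    q2 = fit_means(((s2, a2), y) for _, _, s2, a2, y in data)

    # Stage 1 response: the payoff assuming the optimal stage-2 action is taken,
    # which may differ from the action the patient actually received.
    q1 = fit_means(
        ((s1, a1), q2[(s2, best(q2, s2))]) for s1, a1, s2, _, _ in data
    )

    rule2 = {s2: best(q2, s2) for s2 in {row[2] for row in data}}
    rule1 = {s1: best(q1, s1) for s1 in {row[0] for row in data}}
    return rule1, rule2, q1, q2
```

The stage-1 response here is a plug-in value from the fitted stage-2 model, which is exactly the quantity the abstract describes as unknown and possibly counterfactual; the proposed approach averages over its posterior distribution instead of plugging in a point estimate.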

-

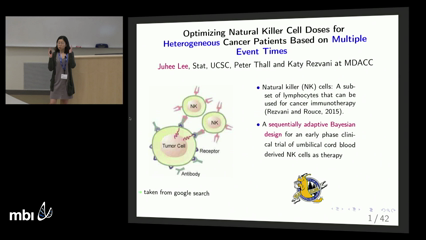

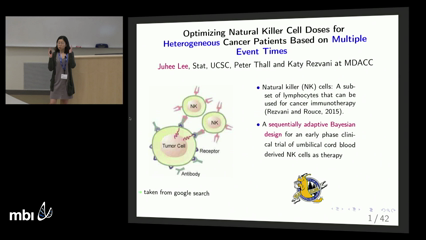

Juhee Lee

A sequentially adaptive Bayesian design is presented for a clinical trial of cord blood-derived natural killer cells to treat severe hematologic malignancies. Given six prognostic subgroups defined by disease type and severity, the goal is to optimize cell dose in each subgroup. The trial has five co-primary outcomes: the times to severe toxicity, cytokine release syndrome, disease progression or response, and death. The design assumes a multivariate Weibull regression model, with marginals depending on dose, subgroup, and patient frailties that induce association among the event times. Utilities of all possible combinations of the nonfatal outcomes over the first 100 days following cell infusion are elicited, with posterior mean utility used as a criterion to optimize dose. For each subgroup, the design stops accrual to doses having an unacceptably high death rate, and at the end of the trial selects the optimal safe dose. A simulation study is presented to validate the design’s safety, ability to identify optimal doses, and robustness, and to compare it to a simplified design that ignores patient heterogeneity.

-

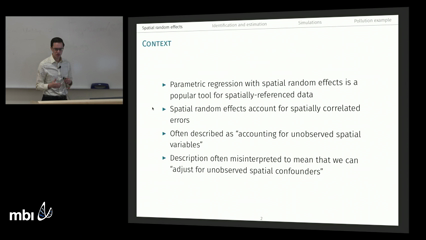

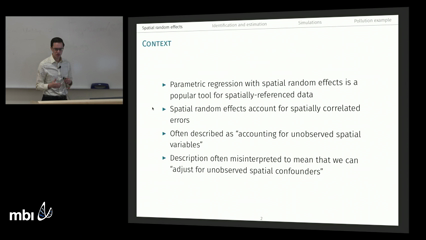

Patrick Schnell

Confounding by unmeasured spatial variables has been of recent interest in both the spatial statistics and causal inference literatures, but the concepts and approaches have remained largely separated. We aim to add a link between these branches of statistics by considering unmeasured spatial confounding within a formal causal inference framework, and estimating effects using outcome regression tools popular within the spatial statistics literature. We show that the common approach of using spatially correlated random effects does not mitigate bias due to spatial confounding, and present a set of assumptions that can be used to do so. Based on these assumptions and a conditional autoregressive model for spatial random effects, we propose an affine estimator which addresses this deficiency, and illustrate its application to causes of fine particulate matter concentration in New England.

-

Yanxun Xu

Estimating individual treatment effects is crucial for individualized or precision medicine. In reality, however, there is no way to obtain both the treated and untreated outcomes from the same person at the same time. An approximation can be obtained from randomized controlled trials (RCTs). Beyond the limitation that randomization is often expensive, impractical, or unethical, the pre-specified variables may still not capture all the relevant characteristics that drive individual heterogeneity in treatment response. In this work, we use non-experimental data; we model heterogeneous treatment effects in the studied population and provide a Bayesian estimator of the individual treatment response. More specifically, we develop a novel Bayesian nonparametric (BNP) method that leverages the G-computation formula to adjust for time-varying confounding in observational data and flexibly models sequential data to provide posterior inference over the treatment response at both the group level and the individual level. On a challenging dataset containing time series from patients admitted to the intensive care unit (ICU), our approach reveals that these patients have heterogeneous responses to the treatments used in managing kidney function. We also show that on held-out data the resulting predicted outcome in response to treatment (or no treatment) is more accurate than that of alternative approaches.

-

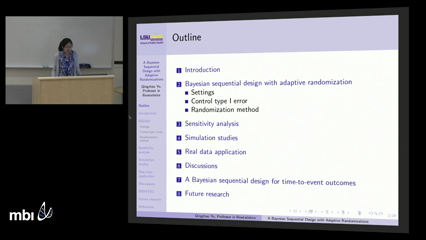

Qingyuan Zhao

Bayesian sequential and adaptive randomization designs are gaining popularity in clinical trials thanks to their potential to reduce the number of required participants and save resources. We propose a Bayesian sequential design with adaptive randomization rates so as to allocate newly recruited patients to different treatment arms more efficiently. In this talk, we consider two-arm clinical trials. Patients are allocated to the two arms with a randomization rate chosen to achieve minimum variance for the test statistic. An alpha spending function is used to control the overall type I error of the hypothesis test. Algorithms are presented to calculate the optimal randomization rate, critical values, and power for the proposed design. A sensitivity analysis checks how changing the prior distributions influences the design. Simulation studies are used to compare the proposed method and traditional methods in terms of power and actual sample sizes. Simulations show that, when the total sample size is fixed, the proposed design can obtain greater power and/or require a smaller actual sample size than the traditional Bayesian sequential design. Finally, we apply the proposed method to a real data set and compare the results with the Bayesian sequential design without adaptive randomization in terms of sample sizes. The proposed method can further reduce the required sample size.
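
To make the alpha spending idea concrete, the sketch below evaluates two widely used Lan-DeMets spending functions at a set of information fractions and reports how much type I error each interim look may spend. The abstract does not say which spending function the design uses, so both choices, the function names, and the example fractions are assumptions made only for illustration.

```python
from math import e, log, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def obrien_fleming_spend(t, alpha=0.05):
    """O'Brien-Fleming-type spending: 2 - 2 * Phi(z_{alpha/2} / sqrt(t)), 0 < t <= 1."""
    z = _STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    return 2.0 * (1.0 - _STD_NORMAL.cdf(z / sqrt(t)))


def pocock_spend(t, alpha=0.05):
    """Pocock-type spending: alpha * ln(1 + (e - 1) * t), 0 < t <= 1."""
    return alpha * log(1.0 + (e - 1.0) * t)


def incremental_alpha(spend, fractions, alpha=0.05):
    """Type I error available at each look, given increasing information fractions."""
    cumulative = [spend(t, alpha) for t in fractions]
    previous = [0.0] + cumulative[:-1]
    return [c - p for c, p in zip(cumulative, previous)]


if __name__ == "__main__":
    looks = [0.25, 0.5, 0.75, 1.0]  # hypothetical interim analysis schedule
    print(incremental_alpha(obrien_fleming_spend, looks))
    print(incremental_alpha(pocock_spend, looks))
```

Both functions spend the full alpha by the final look; the O'Brien-Fleming-type function spends very little early, while the Pocock-type function spends it more evenly across looks.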

-

N/A

-

N/A
